Does big data have the answers? Maybe some, but not all, says Mark Graham...

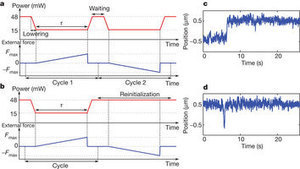

Public opinion is often affected by the presence of committed groups of individuals dedicated to competing points of view. Using a model of pairwise social influence, we study how the presence of such groups within social networks affects the outcome and the speed of evolution of the overall opinion on the network.
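The abstract does not spell out its pairwise influence rule. As a hedged illustration only, the sketch below implements the listener-only binary agreement model, one well-known pairwise model used to study committed minorities, on a complete graph. The function name, its parameters, and the choice to start every uncommitted agent in "B" are assumptions made for this example, not details taken from the paper.

```python
import random


def binary_agreement(n, committed_a, committed_b, steps, seed=0):
    """Listener-only binary agreement model on a complete graph (illustrative sketch).

    Agents hold "A", "B" or the mixed state "AB". The first `committed_a` agents are
    committed to A and the next `committed_b` to B; committed agents never change.
    All uncommitted agents start in "B" (a hypothetical initial condition).
    """
    rng = random.Random(seed)
    n_committed = committed_a + committed_b
    state = ["A"] * committed_a + ["B"] * (n - committed_a)
    for _ in range(steps):
        speaker, listener = rng.sample(range(n), 2)  # complete graph: any pair may meet
        # A mixed speaker voices one of its two opinions at random
        said = state[speaker] if state[speaker] != "AB" else rng.choice("AB")
        if listener < n_committed:
            continue  # committed listeners ignore the speaker
        if said in state[listener]:
            state[listener] = said  # listener already held it: collapse to that opinion
        else:
            state[listener] = "AB"  # listener adds the new opinion to its list
    return state
```

For instance, `binary_agreement(1000, 150, 50, 200_000)` returns the final list of states, which can be tallied with `collections.Counter` to see how far each committed group has spread.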

A universal map is derived for all deterministic 1D cellular automata (CA) containing no freely adjustable parameters. The map can be extended to an arbitrary number of dimensions and topologies, and its invariances allow one to classify all CA rules into equivalence classes. Complexity in 1D systems is then shown to emerge from the weak symmetry breaking of addition modulo an integer p. The latter symmetry is possessed by certain rules that produce Pascal simplices in their time evolution. These results elucidate Wolfram's classification of CA dynamics.
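The link between addition modulo p and Pascal simplices can be seen directly in one dimension. The sketch below (function names are illustrative) evolves an elementary CA from its Wolfram rule number and, separately, the additive rule "new value = (left + right) mod p", both with periodic boundaries. Started from a single nonzero cell, row t of the additive rule holds the binomial coefficients C(t, k) mod p on alternating sites, i.e. Pascal's triangle modulo p; for p = 2 it coincides with elementary rule 90. It does not reproduce the paper's universal map.

```python
def elementary_step(cells, rule):
    """One synchronous update of an elementary (radius-1, binary) CA with
    periodic boundaries; `rule` is the Wolfram rule number (0-255)."""
    n = len(cells)
    return [
        # Neighbourhood (left, centre, right) read as a 3-bit index into the rule
        (rule >> (4 * cells[i - 1] + 2 * cells[i] + cells[(i + 1) % n])) & 1
        for i in range(n)
    ]


def additive_step(cells, p):
    """Additive rule modulo p: each cell becomes (left + right) mod p."""
    n = len(cells)
    return [(cells[i - 1] + cells[(i + 1) % n]) % p for i in range(n)]


def evolve(step, cells, t, *args):
    """Return the list of configurations at times 0..t."""
    history = [cells]
    for _ in range(t):
        cells = step(cells, *args)
        history.append(cells)
    return history


width, centre = 41, 20
seed = [0] * width
seed[centre] = 1
for row in evolve(additive_step, seed, 8, 3):  # Pascal's triangle mod 3
    print("".join(".12"[v] for v in row[centre - 8:centre + 9]))
```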

Various research initiatives try to utilize the operational principles of organisms and brains to develop alternative, biologically inspired computing paradigms and artificial cognitive systems. This article reviews key features of the standard method applied to complexity in the cognitive and brain sciences, i.e. decompositional analysis or reverse engineering. The indisputable complexity of brain and mind raises the question of whether they can be understood by applying the standard method. In fact, recent experimental and theoretical findings call into question central assumptions and hypotheses made for reverse engineering. Using the modeling relation as analyzed by Robert Rosen, the scientific analysis method itself is made a subject of discussion. It is concluded that the fundamental assumption of cognitive science, namely that complex cognitive systems can be analyzed, understood and duplicated by reverse engineering, must be abandoned. Implications for investigations of organisms and behavior as well as for engineering artificial cognitive systems are discussed.

Saint Matthew strikes again: An agent-based model of peer review and the scientific community structure

Flaminio Squazzoni, Claudio Gandelli

In 1961, Rolf Landauer argued that the erasure of information is a dissipative process. A minimal quantity of heat, proportional to the thermal energy and called the Landauer bound, is necessarily produced when a classical bit of information is deleted. A direct consequence of this logically irreversible transformation is that the entropy of the environment increases by a finite amount. Despite its fundamental importance for information theory and computer science, the erasure principle has not been verified experimentally so far (…) This result demonstrates the intimate link between information theory and thermodynamics. It further highlights the ultimate physical limit of irreversible computation.
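The Landauer bound is k_B T ln 2 of heat per erased bit, and the corresponding minimum entropy increase of the environment is k_B ln 2 per bit. The short calculation below (function names are illustrative) evaluates both; at room temperature (300 K) the bound is about 2.87 × 10^-21 J, or roughly 0.018 eV, per bit.

```python
import math

K_B = 1.380649e-23    # Boltzmann constant in J/K (exact in the 2019 SI)
EV = 1.602176634e-19  # one electronvolt in J (exact in the 2019 SI)


def landauer_bound(temperature_k, bits=1):
    """Minimum heat (J) dissipated when erasing `bits` classical bits at temperature T (K)."""
    return bits * K_B * temperature_k * math.log(2)


def environment_entropy_increase(bits=1):
    """Minimum entropy increase of the environment (J/K): k_B ln 2 per erased bit."""
    return bits * K_B * math.log(2)


q = landauer_bound(300.0)
print(f"{q:.3e} J = {q / EV:.4f} eV per bit at 300 K")
```

The bound scales linearly with temperature and with the number of erased bits, which is why it matters mainly as an ultimate limit rather than as a practical cost in today's hardware.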

Many recent models of trade dynamics use the simple idea of wealth exchanges among economic agents in order to obtain a stable or equilibrium distribution of wealth among the agents. In particular, a plain analogy compares the wealth in a society with the energy in a physical system, and the trade between agents to the energy exchange between molecules during collisions. In physical systems, the energy exchange among molecules leads to a state of equipartition of the energy and to an equilibrium situation where the entropy is a maximum. On the other hand, in a large class of exchange models, the system converges to a very unequal condensed state, where one or a few agents concentrate all the wealth of the society while the vast majority of agents hold zero or almost zero wealth. In those economic systems, therefore, a minimum-entropy state is reached. We propose here an analytical model where we investigate the effects of a particular class of economic exchanges that minimize the entropy. By solving the model we discuss the conditions that can drive the system to a state of minimum entropy, as well as the mechanisms to recover a kind of equipartition of wealth.
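The drift toward a condensed, low-entropy state can be reproduced with a much simpler rule than the one studied in the paper. The sketch below uses the yard-sale model, a standard conservative exchange rule that is not the authors' model: two random agents meet, and a fair coin decides who wins a fixed fraction of the poorer agent's wealth. The entropy of the wealth shares starts at its maximum, ln N, under equipartition and falls as wealth concentrates. Function names and parameter values are arbitrary choices for illustration.

```python
import math
import random


def wealth_entropy(wealth):
    """Shannon entropy of wealth shares; maximal (ln N) at equipartition."""
    total = sum(wealth)
    return -sum((w / total) * math.log(w / total) for w in wealth if w > 0)


def yard_sale(n_agents=100, steps=200_000, fraction=0.1, seed=0):
    """Yard-sale exchange: a random pair trades, and a fair coin decides who
    receives `fraction` of the poorer agent's wealth. Total wealth is conserved."""
    rng = random.Random(seed)
    wealth = [1.0] * n_agents
    for _ in range(steps):
        i, j = rng.sample(range(n_agents), 2)
        stake = fraction * min(wealth[i], wealth[j])
        if rng.random() < 0.5:
            i, j = j, i  # swap roles so that i is always the winner
        wealth[i] += stake
        wealth[j] -= stake
    return wealth


w = yard_sale()
print(f"entropy: start {math.log(len(w)):.3f}, end {wealth_entropy(w):.3f}")
```

Because the stake is a fraction of the poorer agent's wealth, no agent can go negative, yet repeated fair bets still push the distribution toward concentration.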

What do humpback whales returning to calve in the waters of Maui have to do with the ground state energy of a quantum system? Both exhibit a mathematical property called the "reduction phenomenon", which can be described very simply: mixing reduces growth, and differential growth selects for reduced mixing. The phenomenon underlies the Reduction Principle for the evolution of recombination, mutation, and dispersal rates, as well as recent results in reaction-diffusion models of dispersal. Animals returning to their birth places to give birth exemplify operation of the Reduction Principle through philopatry. In molecular genetics it is manifest as error-free DNA repair. In cultural evolution models it is manifest as traditionalism. Yet, departures from reduction are abundant, such as recombination and dispersal. How can sense be made of the complexity of outcomes? Two new papers describe mathematical results that extend the reduction phenomenon to infinite-dimensional operators, and investigate departures from reduction which follow the "Principle of Partial Control". A new population statistic, the "fitness-abundance covariance", links ecological properties to the reduction phenomenon.
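In the finite-dimensional setting studied by Karlin, "mixing reduces growth" concerns the spectral radius of [(1 − m)I + mP]D, where P is a stochastic mixing matrix (for example, dispersal between patches), D is a diagonal matrix of growth rates that are not all equal, and m is the mixing rate. The sketch below checks this numerically for one hypothetical two-patch example with symmetric P; it is an illustration, not a proof, and it does not cover the infinite-dimensional results the papers describe.

```python
def spectral_radius(matrix, iterations=2000):
    """Perron root of a nonnegative matrix by power iteration (assumes the
    iteration converges, e.g. for a primitive matrix or a diagonal one)."""
    n = len(matrix)
    x = [1.0] * n
    lam = 0.0
    for _ in range(iterations):
        y = [sum(matrix[i][j] * x[j] for j in range(n)) for i in range(n)]
        s = sum(y)
        lam = s / sum(x)
        x = [v / s for v in y]  # renormalise to avoid overflow
    return lam


def growth_with_mixing(P, d, m):
    """Entries of [(1 - m) I + m P] D, with D = diag(d) and mixing rate m in [0, 1]."""
    n = len(d)
    return [[((1.0 - m) * (1.0 if i == j else 0.0) + m * P[i][j]) * d[j]
             for j in range(n)] for i in range(n)]


P = [[0.5, 0.5], [0.5, 0.5]]  # hypothetical symmetric mixing matrix
d = [1.0, 2.0]                # hypothetical growth rates in two patches
for m in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(m, round(spectral_radius(growth_with_mixing(P, d, m)), 4))
```

The printed growth rate falls monotonically from 2 at m = 0 (no mixing, so the better patch sets the rate) to 1.5 at m = 1.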

Recent developments in biotechnology have enabled interrogation of the cell at various levels, leading to many types of "omic" data that provide valuable information on multiple genetic and environmental factors and their interactions. The featured Web extra is a video interview with Mehmet Koyutürk of Case Western Reserve about how biotechnology can track genetic markers to advance cancer research.

An overview is given of theoretical progress on self-organization at the nanoscale in reactive systems of heterogeneous catalysis observed by field emission microscopy techniques and at the molecular scale in copolymerization processes. The results are presented in the perspective of recent advances in nonequilibrium thermodynamics and statistical mechanics, allowing us to understand how nanosystems driven away from equilibrium can manifest directionality and dynamical order.

We introduce a new method to efficiently approximate the number of infections resulting from a given initially infected node in a network of susceptible individuals, based on counting the number of possible infection paths of various lengths to each other node in the network. We systematically study the properties of our method analytically, in particular demonstrating different forms for SIS and SIR disease spreading (e.g. under the SIR model our method counts self-avoiding walks). In comparison to existing methods for inferring the spreading efficiency of different nodes in the network (based on degree, k-shell decomposition analysis and different centrality measures), our method directly considers the spreading process, and as such is unique in estimating actual numbers of infections. Crucially, in simulating infections on various real-world networks with the SIR model, we show that our walks-based method improves the inference of node spreading effectiveness over a wide range of infection rates compared to existing methods. We also analyse the trade-off between estimate accuracy and computational cost of our method, showing that its better accuracy can still be obtained at a computational cost comparable to that of other methods.
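The walk-counting idea can be sketched in a few lines. This is a simplified illustration, not the paper's estimator: it counts all walks (revisits allowed, the SIS-style case) and self-avoiding walks (the SIR-style case) of each length from a source node, then weights length-l counts by beta**l, where beta is assumed to be a per-edge transmission probability. All function names are hypothetical. On a tree, where each node is reached by exactly one self-avoiding path, the self-avoiding score equals the expected number of nodes reached if every edge transmits independently with probability beta.

```python
def walk_counts(adj, source, max_len):
    """Number of walks of length 1..max_len starting at `source` (revisits allowed).
    adj: dict mapping node -> list of neighbours (undirected graph)."""
    counts_at = {v: 0 for v in adj}
    counts_at[source] = 1
    totals = []
    for _ in range(max_len):
        nxt = {v: 0 for v in adj}
        for v, c in counts_at.items():
            for w in adj[v]:
                nxt[w] += c  # every walk ending at v extends to each neighbour w
        counts_at = nxt
        totals.append(sum(counts_at.values()))
    return totals


def self_avoiding_walk_counts(adj, source, max_len):
    """Number of self-avoiding walks (paths) of length 1..max_len from `source`."""
    totals = [0] * max_len

    def extend(v, visited, length):
        for w in adj[v]:
            if w not in visited and length < max_len:
                totals[length] += 1  # found a path of length `length + 1`
                visited.add(w)
                extend(w, visited, length + 1)
                visited.remove(w)

    extend(source, {source}, 0)
    return totals


def walk_score(counts, beta):
    """Sum of counts weighted by beta**length (counts[0] holds length-1 walks)."""
    return sum(c * beta ** (length + 1) for length, c in enumerate(counts))


path = {0: [1], 1: [0, 2], 2: [1]}
print(walk_score(self_avoiding_walk_counts(path, 0, 2), 0.5))
```

Walk counts can be computed by repeated matrix-vector products and are cheap; self-avoiding walks must be enumerated and grow quickly with length, so any practical use would cap `max_len`.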

Decentralized problem solving works better on some problems than others. According to an article from SEO Theory, swarms work in situations that involve discovery, testing, and comparing results: for example, ants finding the most efficient route to food, or iPhone users consulting the Yelp app to find the highest-rated restaurant nearby. By leveraging the number of agents involved, you can act on complex and rapidly changing sets of data. This works in situations where you are looking for the most efficient method of execution or an optimal process. Shipping companies, for example, use Swarm Theory computer algorithms to reallocate trucking routes based on up-to-the-minute energy prices. Swarm Theory works less effectively for creative processes like innovation, except perhaps as a broad directional pointer. A swarm cannot paint the next Mona Lisa.
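The ant example can be made concrete with the classic "double bridge" setting often used to motivate ant colony optimization. The sketch below is a deterministic, expected-value simplification (not taken from the SEO Theory article, and not a production routing algorithm): pheromone evaporates at rate `rho`, each ant deposits 1/length on the route it takes, and routes are chosen in proportion to their pheromone. The function name and all parameter values are hypothetical.

```python
def double_bridge(n_ants=10, rho=0.1, lengths=(1.0, 2.0), iterations=200):
    """Expected-value pheromone dynamics on two alternative routes.

    Each iteration, route k is chosen with probability proportional to its pheromone;
    pheromone evaporates at rate rho and each ant deposits 1/length on its route.
    Returns the probability of choosing route 0 after every iteration."""
    tau = [1.0, 1.0]
    history = []
    for _ in range(iterations):
        total = sum(tau)
        choice = [t / total for t in tau]
        tau = [(1.0 - rho) * t + n_ants * p / length
               for t, p, length in zip(tau, choice, lengths)]
        history.append(tau[0] / sum(tau))
    return history


print(round(double_bridge()[-1], 4))
```

With the default lengths (1.0, 2.0), the probability of choosing the shorter route rises at every iteration, because the shorter route collects more pheromone per ant; with equal lengths it stays at 0.5, since nothing breaks the symmetry.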

Via Fabio Vanni

Here are some brief notes about how Google fosters collective intelligence through the structure of the organisation, values and culture.

Via Spaceweaver

By Steve Mills

Senior Vice President and Group Executive

IBM

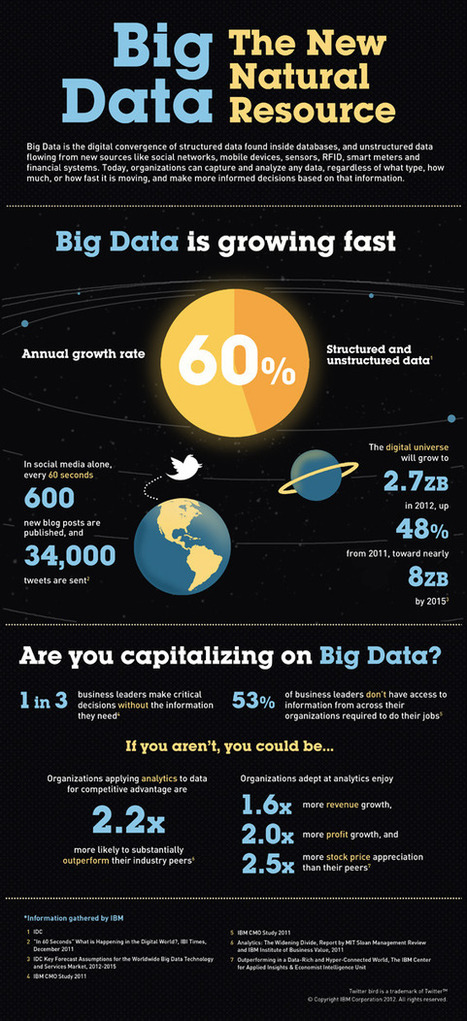

Frustration with “information overload” is one of the facts of life these days.

Via Spaceweaver

The very notion of a social network implies that linked individuals interact repeatedly with each other. Repeated interaction allows them not only to learn successful strategies and adapt to them, but also to condition their own behavior on the behavior of others in a strategic, forward-looking manner. The theory of repeated games shows that these circumstances are conducive to the emergence of collaboration in simple two-player games. We investigate the extension of this concept to the case where players are engaged in a local contribution game and show that rationality and credibility of threats identify a class of Nash equilibria, which we call “collaborative equilibria”, that have a precise interpretation in terms of subgraphs of the social network. For large network games, the number of such equilibria is exponentially large in the number of players. When incentives to defect are small, equilibria are supported by local structures, whereas when incentives exceed a threshold they acquire a nonlocal nature, which requires a “critical mass” of more than a given fraction of the players to collaborate. Therefore, when incentives are high, an individual deviation typically causes the collapse of collaboration across the whole system. At the same time, higher incentives to defect typically support equilibria with a higher density of collaborators. The resulting picture conforms with several results in sociology and in the experimental literature on game theory, such as the prevalence of collaboration in denser groups and in the structural hubs of sparse networks.

Suggested by

Hiroki Sayama

Social contagion: What do we really know? by Duncan Watts

Suggested by

Hiroki Sayama

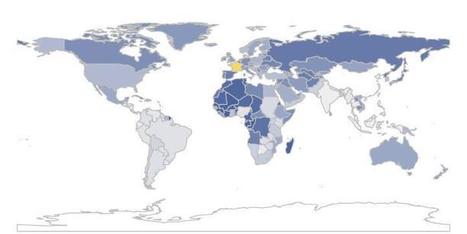

The Facebook Data Team wrote a note titled “Mapping Global Friendship Ties.”

Science assessments indicate that human activities are moving several of Earth's sub-systems outside the range of natural variability typical for the previous 500,000 years. Human societies must now change course and steer away from critical tipping points in the Earth system that might lead to rapid and irreversible change. This requires fundamental reorientation and restructuring of national and international institutions toward more effective Earth system governance and planetary stewardship.

Social networks affect the dynamics of the populations they support so fundamentally that the global, population-wide behavior one observes often bears no relation to the individual processes from which it stems. Until now, linking the global networked dynamics to such individual mechanisms has remained elusive.

Identifying and removing spurious links in complex networks matters for many real-world applications and is crucial for improving the reliability of network data, which in turn can lead to a better understanding of the highly interconnected nature of various social, biological, and communication systems. In this paper, we study the features of different simple spurious-link elimination methods, revealing that they may distort networks' structural and dynamical properties. Accordingly, we propose a hybrid method that combines a similarity-based index with edge-betweenness centrality. We show that our method can effectively eliminate spurious interactions while leaving the network connected and preserving its functionality.
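The abstract does not say how the similarity index and edge betweenness are combined. The sketch below assumes a hypothetical linear score: the number of common neighbours (a simple similarity index) plus `alpha` times normalised edge betweenness, computed with a Brandes-style accumulation. Edges with the lowest score are treated as most suspicious, and an explicit connectivity check refuses any removal that would split the network. All function names and the scoring formula are illustrative assumptions, not the authors' method.

```python
from collections import deque


def edge_betweenness(adj):
    """Brandes-style edge betweenness for an unweighted, undirected graph.
    adj: dict node -> set of neighbours. Returns {(u, v): score} with u < v."""
    eb = {(u, v): 0.0 for u in adj for v in adj[u] if u < v}
    for s in adj:
        # BFS from s: shortest-path counts (sigma) and predecessor lists
        order, preds = [], {v: [] for v in adj}
        sigma, dist = dict.fromkeys(adj, 0), dict.fromkeys(adj, -1)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Back-propagate path dependencies from the farthest nodes inward
        delta = dict.fromkeys(adj, 0.0)
        for w in reversed(order):
            for v in preds[w]:
                share = sigma[v] / sigma[w] * (1.0 + delta[w])
                eb[(min(v, w), max(v, w))] += share
                delta[v] += share
    return {e: b / 2.0 for e, b in eb.items()}  # each pair was counted from both ends


def is_connected(adj):
    start = next(iter(adj))
    seen, queue = {start}, deque([start])
    while queue:
        for w in adj[queue.popleft()]:
            if w not in seen:
                seen.add(w)
                queue.append(w)
    return len(seen) == len(adj)


def rank_suspicious_edges(adj, alpha=1.0):
    """Lowest score first: common neighbours + alpha * normalised betweenness."""
    eb = edge_betweenness(adj)
    top = max(eb.values()) or 1.0
    return sorted(eb, key=lambda e: len(adj[e[0]] & adj[e[1]]) + alpha * eb[e] / top)


def remove_spurious(adj, k, alpha=1.0):
    """Remove up to k top-ranked edges, skipping any whose removal disconnects the graph."""
    g = {v: set(ns) for v, ns in adj.items()}
    removed = []
    for u, v in rank_suspicious_edges(adj, alpha):
        if len(removed) == k:
            break
        g[u].discard(v)
        g[v].discard(u)
        if is_connected(g):
            removed.append((u, v))
        else:  # restore an edge the network cannot do without
            g[u].add(v)
            g[v].add(u)
    return g, removed
```

On two triangles joined by a single bridge, pure similarity (`alpha=0.0`) ranks the bridge as the most suspicious edge because its endpoints share no neighbours; the betweenness term or the connectivity check is what keeps it in place.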

Complexity science unifies some forty diverse features that arise from the evolution of the civil system and these underlie theory development in the futures field. The main features of an evolutionary methodology deal with emergence, macrolaws, civil or societal transitions, macrosystem design, and the absorption of extreme events. The following principles apply: (1) The civil system is an open system in which investment capital is the system growth parameter that drives it away from equilibrium, with the formation of spatial structure. (2) The historical circumstances of human settlements provide a path dependency in respect of natural resources, defence, energy, transport, or communications. (3) Emergent properties arise within a complex adaptive system from which a theory of the system can be formulated, and these are not deducible from the features of the transacting entities. (4) Futures research identifies the conditions that will lead to an irreversible civil or societal phase transition to a new stage of development. (5) Emergent behaviour in the macrostructure at regional or continental levels can be influenced through critical intervention points in the global macrosystems.

The Turing Test (TT) checks for human intelligence, rather than any putative general intelligence. It involves repeated interaction requiring learning in the form of adaptation to the human conversation partner. It is a macro-level post-hoc test, in contrast to the definition of a Turing Machine (TM), which is an a priori micro-level definition. This raises the question of whether learning is just another computational process, i.e. whether it can be implemented as a TM. Here we argue that learning or adaptation is fundamentally different from computation, though it does involve processes that can be seen as computations. (…) We conclude three things, namely that: a purely "designed" TM will never pass the TT; that there is no such thing as a general intelligence, since it necessarily involves learning; and that learning/adaptation and computation should be clearly distinguished.

In this paper we report our findings on the analysis of two large datasets representing the friendship structure of the well-known Facebook network. In particular, we discuss the quantitative assessment of Granovetter's strength-of-weak-ties theory, considering the problem from the perspective of the community structure of the network. Our findings provide some evidence that this theory also holds for a large-scale online social network such as Facebook.
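One simple way to look at weak ties through community structure is to compare the strength of ties inside communities with the strength of ties that bridge them; Granovetter's theory leads one to expect the bridging ties to be weaker on average. The sketch below assumes a community partition and a per-edge strength are already available. The toy data and the function name are hypothetical, and this is not the procedure used in the paper.

```python
def mean_strength_by_edge_type(edges, community):
    """edges: iterable of (u, v, strength); community: dict node -> community label.
    Returns (mean intra-community strength, mean inter-community strength)."""
    intra, inter = [], []
    for u, v, strength in edges:
        (intra if community[u] == community[v] else inter).append(strength)

    def mean(xs):
        return sum(xs) / len(xs) if xs else float("nan")

    return mean(intra), mean(inter)


# Hypothetical toy data: strength could be, e.g., a count of interactions per tie.
community = {0: "a", 1: "a", 2: "a", 3: "b", 4: "b"}
edges = [(0, 1, 5), (1, 2, 4), (0, 2, 6), (3, 4, 5), (2, 3, 1)]
print(mean_strength_by_edge_type(edges, community))
```

On real data the partition would come from a community-detection algorithm, and the comparison is only as meaningful as the chosen proxy for tie strength.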

Complexity Engineering encompasses a set of approaches to engineering systems that are typically composed of various interacting entities, often exhibiting self-* behaviours and emergence. The engineer or designer uses methods that benefit from the findings of complexity science and often differ considerably from the classical engineering approach of ‘divide and conquer’. This article provides an overview of some highly interdisciplinary and innovative research areas and projects in the field of Complexity Engineering, including synthetic biology, chemistry, artificial life, self-healing materials and others. It then classifies the presented work according to five types of nature-inspired technology, namely: (1) using technology to understand nature, (2) nature-inspiration for technology, (3) using technology on natural systems, (4) using biotechnology methods in software engineering, and (5) using technology to model nature. Finally, future trends in Complexity Engineering are indicated and related risks are discussed.

Guest bloggers sound off on solutions for the future. Eight change accelerators in energy, mobility and design start the conversation, and you join in.

Via Alessio Erioli, proto-e-co-logics
