Tetsushi Ohdaira Chaos, Solitons & Fractals

Volume 182, May 2024, 114754

• The universal probabilistic reward based on the difference of payoff is proposed.

• The greater payoff difference leads to the higher rewarding probability.

• This new reward mechanism effectively enhances the evolution of cooperation.

Read the full article at: www.sciencedirect.com

Julyan H. E. Cartwright, Jitka Čejková, Elena Fimmel, Simone Giannerini, Diego Luis Gonzalez, Greta Goracci, Clara Grácio, Jeanine Houwing-Duistermaat, Dragan Matić, Nataša Mišić, Frans A. A. Mulder, Oreste Piro Artificial Life (2024) 30 (1): 16–27. In the mid-20th century, two new scientific disciplines emerged forcefully: molecular biology and information-communication theory. At the beginning, cross-fertilization was so deep that the term genetic code was universally accepted for describing the meaning of triplets of mRNA (codons) as amino acids. However, today, such synergy has not taken advantage of the vertiginous advances in the two disciplines and presents more challenges than answers. These challenges not only are of great theoretical relevance but also represent unavoidable milestones for next-generation biology: from personalized genetic therapy and diagnosis to Artificial Life to the production of biologically active proteins. Moreover, the matter is intimately connected to a paradigm shift needed in theoretical biology, pioneered a long time ago, that requires combined contributions from disciplines well beyond the biological realm. The use of information as a conceptual metaphor needs to be turned into quantitative and predictive models that can be tested empirically and integrated in a unified view. Successfully achieving these tasks requires a wide multidisciplinary approach, including Artificial Life researchers, to address such an endeavour. Read the full article at: direct.mit.edu

AMBRA AMICO, LUCA VERGINER, GIONA CASIRAGHI, GIACOMO VACCARIO, AND FRANK SCHWEITZER

SCIENCE ADVANCES

17 Jan 2024

Vol 10, Issue 3 Supply chain disruptions may cause shortages of essential goods, affecting millions of individuals. We propose a perspective to address this problem via reroute flexibility. This is the ability to substitute and reroute products along existing pathways, hence without requiring the creation of new connections. To showcase the potential of this approach, we examine the US opioid distribution system. We reconstruct over 40 billion distribution routes and quantify the effectiveness of reroute flexibility in mitigating shortages. We demonstrate that flexibility (i) reduces the severity of shortages and (ii) delays the time until they become critical. Moreover, our findings reveal that while increased flexibility alleviates shortages, it comes at the cost of increased complexity: We demonstrate that reroute flexibility increases alternative path usage and slows down the distribution system. Our method enhances decision-makers’ ability to manage the resilience of supply chains. Read the full article at: www.science.org

Alan Dorin, Susan Stepney Artificial Life (2024) 30 (1): 1–15. The field called Artificial Life (ALife) coalesced following a workshop organized by Chris Langton in September 1987 (Langton, 1988a). That meeting drew together work that had been largely carried out from the 1950s through to the 1980s. A few years later, Langton became the founding editor of this journal, Artificial Life, which started its life with Volume 1, Issue 1_2 in the (northern) winter of 1993/1994. This current issue therefore begins the 30th volume and 30th year of Artificial Life. We think this is a milestone worth celebrating!

In the proceedings of that first workshop, Langton famously defined ALife as the study of “life as it could be,” of “possible life,” in contrast to biology’s study of “life as we know it to be” (on Earth). His stated aim was to derive “a truly general theoretical biology capable of making universal statements about life wherever it may be found and whatever it may be made of” (Langton, 1988b, p. xvi). Read the full article at: direct.mit.edu

Michele Avalle, Niccolò Di Marco, Gabriele Etta, Emanuele Sangiorgio, Shayan Alipour, Anita Bonetti, Lorenzo Alvisi, Antonio Scala, Andrea Baronchelli, Matteo Cinelli & Walter Quattrociocchi

Nature (2024) Growing concern surrounds the impact of social media platforms on public discourse and their influence on social dynamics, especially in the context of toxicity. Here, to better understand these phenomena, we use a comparative approach to isolate human behavioural patterns across multiple social media platforms. In particular, we analyse conversations in different online communities, focusing on identifying consistent patterns of toxic content. Drawing from an extensive dataset that spans eight platforms over 34 years, from Usenet to contemporary social media, our findings show consistent conversation patterns and user behaviour, irrespective of the platform, topic or time. Notably, although long conversations consistently exhibit higher toxicity, toxic language does not invariably discourage people from participating in a conversation, and toxicity does not necessarily escalate as discussions evolve. Our analysis suggests that debates and contrasting sentiments among users significantly contribute to more intense and hostile discussions. Moreover, the persistence of these patterns across three decades, despite changes in platforms and societal norms, underscores the pivotal role of human behaviour in shaping online discourse. Read the full article at: www.nature.com

Suggested by Hector Zenil

Felipe S. Abrahão, Santiago Hernández-Orozco, Narsis A. Kiani, Jesper Tegnér, Hector Zenil We demonstrate that Assembly Theory, pathway complexity, the assembly index, and the assembly number are subsumed and constitute a weak version of algorithmic (Kolmogorov-Solomonoff-Chaitin) complexity reliant on an approximation method based upon statistical compression, their results obtained due to the use of methods strictly equivalent to the LZ family of compression algorithms used in compressing algorithms such as ZIP, GZIP, or JPEG. Such popular algorithms have been shown to empirically reproduce the results of AT's assembly index and their use had already been reported in successful application to separating organic from non-organic molecules, and the study of selection and evolution. Here we exhibit and prove the connections and full equivalence of Assembly Theory to Shannon Entropy and statistical compression, and AT's disconnection as a statistical approach from causality. We demonstrate that formulating a traditional statistically compressed description of molecules, or the theory underlying it, does not imply an explanation or quantification of biases in generative (physical or biological) processes, including those brought about by selection and evolution, when lacking in logical consistency and empirical evidence. We argue that in their basic arguments, the authors of AT conflate how objects may assemble with causal directionality, and conclude that Assembly Theory does nothing to explain selection or evolution beyond known and previously established connections, some of which are reviewed here, based on sounder theory and better experimental evidence. Read the full article at: arxiv.org

See Also: Assembly Theory: What It Does and What It Does Not Do; Molecular assembly indices of mineral heteropolyanions: some abiotic molecules are as complex as large biomolecules

HONGZHONG DENG, JI LI, HONGQIAN WU, and BINGFENG GE Advances in Complex Systems Vol. 26, No. 07n08, 2350011 System structure can affect or decide the system function. Many pioneers have analyzed the impact of a system’s macro-statistical characteristics, such as degree distribution and giant component, on system performance. But only a few research works have been conducted on the relation of mesoscopic structure and agent property with system task performance. In this paper, we designed a scenario in which, in a multiagent system, agents try their best to form a qualified team to fulfill more system tasks under the requirements from agent property, structure and task. The theoretical and simulation results show that the agent link network, agent properties and task requirement co-affect the dynamic team formation and ultimately have serious effects on a system’s task completion ratio and performance. Some factors such as network density and task introduction period have positive influence. Task execution time and team size have negative influence. Some factors show a counter-intuitive influence. The clustering coefficient has less influence than people expected, and a longer task publicity time is not always better. Notably, system performance is affected by the coupling effect, instead of the independent effects of all factors. The effect of system structure on system function conditionally relies on the support from agent ability and task requirement. Read the full article at: www.worldscientific.com

Cory Glover, Albert-László Barabási

The links of a physical network cannot cross, which often forces the network layout into non-optimal entangled states. Here we define a network fabric as a two-dimensional projection of a network and propose the average crossing number as a measure of network entanglement. We analytically derive the dependence of the crossing number on network density, average link length, degree heterogeneity, and community structure and show that the predictions accurately estimate the entanglement of both network models and of real physical networks. Read the full article at: arxiv.org

Giona Casiraghi, Georges Andres

Changes in the timescales at which complex systems evolve are essential to predicting critical transitions and catastrophic failures. Disentangling the timescales of the dynamics governing complex systems remains a key challenge. With this study, we introduce an integrated Bayesian framework based on temporal network models to address this challenge. We focus on two methodologies: change point detection for identifying shifts in system dynamics, and a spectrum analysis for inferring the distribution of timescales. Applied to synthetic and empirical datasets, these methodologies robustly identify critical transitions and comprehensively map the dominant and subsidiary timescales in complex systems. This dual approach offers a powerful tool for analyzing temporal networks, significantly enhancing our understanding of dynamic behaviors in complex systems. Read the full article at: arxiv.org

Tim Bayne, Anil K. Seth, Marcello Massimini, Joshua Shepherd, Axel Cleeremans, Stephen M. Fleming, Rafael Malach, Jason B. Mattingley, David K. Menon, Adrian M. Owen, Megan A.K. Peters, Adeel Razi, Liad Mudrik Trends in Cognitive Sciences Which systems/organisms are conscious? New tests for consciousness (‘C-tests’) are urgently needed. There is persisting uncertainty about when consciousness arises in human development, when it is lost due to neurological disorders and brain injury, and how it is distributed in nonhuman species. This need is amplified by recent and rapid developments in artificial intelligence (AI), neural organoids, and xenobot technology. Although a number of C-tests have been proposed in recent years, most are of limited use, and currently we have no C-tests for many of the populations in which they are most urgently needed. Here, we identify challenges facing any attempt to develop C-tests, propose a multidimensional classification of such tests, and identify strategies that might be used to validate them. Read the full article at: www.cell.com

Thomas P. Wytock and Adilson E. Motter PNAS 121 (11) e2312942121 The lack of genome-wide mathematical models for the gene regulatory network complicates the application of control theory to manipulate cell behavior in humans. We address this challenge by developing a transfer learning approach that leverages genome-wide transcriptomic profiles to characterize cell type attractors and perturbation responses. These responses are used to predict a combinatorial perturbation that minimizes the transcriptional difference between an initial and target cell type, bringing the regulatory network to the target cell type basin of attraction. We anticipate that this approach will enable the rapid identification of potential targets for treatment of complex diseases, while also providing insight into how the dynamics of gene regulatory networks affect phenotype.

Read the full article at: www.pnas.org

Alice D. Bridges, Amanda Royka, Tara Wilson, Charlotte Lockwood, Jasmin Richter, Mikko Juusola & Lars Chittka

Nature (2024) Culture refers to behaviours that are socially learned and persist within a population over time. Increasing evidence suggests that animal culture can, like human culture, be cumulative: characterized by sequential innovations that build on previous ones. However, human cumulative culture involves behaviours so complex that they lie beyond the capacity of any individual to independently discover during their lifetime. To our knowledge, no study has so far demonstrated this phenomenon in an invertebrate. Here we show that bumblebees can learn from trained demonstrator bees to open a novel two-step puzzle box to obtain food rewards, even though they fail to do so independently. Experimenters were unable to train demonstrator bees to perform the unrewarded first step without providing a temporary reward linked to this action, which was removed during later stages of training. However, a third of naive observer bees learned to open the two-step box from these demonstrators, without ever being rewarded after the first step. This suggests that social learning might permit the acquisition of behaviours too complex to ‘re-innovate’ through individual learning. Furthermore, naive bees failed to open the box despite extended exposure for up to 24 days. This finding challenges a common opinion in the field: that the capacity to socially learn behaviours that cannot be innovated through individual trial and error is unique to humans. Read the full article at: www.nature.com

Pablo Gallarta-Sáenz, Hugo Pérez-Martínez, Jesús Gómez-Gardeñes Chaos 34, 033106 (2024) In this work, we analyze how reputation-based interactions influence the emergence of innovations. To do so, we make use of a dynamic model that mimics the discovery process by which, at each time step, a pair of individuals meet and merge their knowledge to eventually result in a novel technology of higher value. The way in which these pairs are brought together is found to be crucial for achieving the highest technological level. Our results show that when the influence of reputation is weak or moderate, it induces an acceleration of the discovery process with respect to the neutral case (purely random coupling). However, an excess of reputation is clearly detrimental, because it leads to an excessive concentration of knowledge in a small set of people, which prevents a diversification of the technologies discovered and, in addition, leads to societies in which a majority of individuals lack technical capabilities. Read the full article at: pubs.aip.org

Inman Harvey Artificial Life (2024) 30 (1): 48–64. We survey the general trajectory of artificial intelligence (AI) over the last century, in the context of influences from Artificial Life. With a broad brush, we can divide technical approaches to solving AI problems into two camps: GOFAIstic (or computationally inspired) or cybernetic (or ALife inspired). The latter approach has enabled advances in deep learning and the astonishing AI advances we see today—bringing immense benefits but also societal risks. There is a similar divide, regrettably unrecognized, over the very way that such AI problems have been framed. To date, this has been overwhelmingly GOFAIstic, meaning that tools for humans to use have been developed; they have no agency or motivations of their own. We explore the implications of this for concerns about existential risk for humans of the “robots taking over.” The risks may be blamed exclusively on human users—the robots could not care less. Read the full article at: direct.mit.edu

Francis Heylighen, Shima Beigi, and Tomas Veloz Systems 2024, 12(4), 111 This paper summarizes and reviews Chemical Organization Theory (COT), a formalism for the analysis of complex, self-organizing systems across multiple disciplines. Its elements are resources and reactions. A reaction maps a set of resources onto another set, thus representing an elementary process that transforms resources into new resources. Reaction networks self-organize into invariant subnetworks, called ‘organizations’, which are attractors of their dynamics. These are characterized by closure (no new resources are added) and self-maintenance (no existing resources are lost). Thus, they provide a simple model of autopoiesis: the organization persistently recreates its own components. The resilience of organizations in the face of perturbations depends on properties such as the size of their basin of attraction and the redundancy of their reaction pathways. Application domains of COT include the origin of life, systems biology, cognition, ecology, Gaia theory, sustainability, consciousness, and social systems. Read the full article at: www.mdpi.com
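
The closure and self-maintenance conditions described above can be sketched in Python on a toy reaction network. This is only an illustration under stated assumptions, not code from the paper: the set-based encoding of reactions, the function names, and the three-resource network are all hypothetical, and self-maintenance is approximated by a weaker qualitative check (every resource consumed by an applicable reaction is also produced by one) that ignores stoichiometry and reaction rates.

```python
from typing import FrozenSet, Iterable, List, Tuple

# Hypothetical encoding: a reaction is a pair (reactants, products) of resource sets.
Reaction = Tuple[FrozenSet[str], FrozenSet[str]]


def applicable(reactions: Iterable[Reaction], resources: FrozenSet[str]) -> List[Reaction]:
    """Reactions whose reactants are all present in the resource set."""
    return [r for r in reactions if r[0] <= resources]


def is_closed(reactions: Iterable[Reaction], resources: FrozenSet[str]) -> bool:
    """Closure: applicable reactions never produce a resource outside the set."""
    return all(products <= resources for _, products in applicable(reactions, resources))


def is_semi_self_maintaining(reactions: Iterable[Reaction], resources: FrozenSet[str]) -> bool:
    """Simplified self-maintenance: every consumed resource is also produced."""
    consumed, produced = set(), set()
    for reactants, products in applicable(reactions, resources):
        consumed |= reactants - products  # catalysts reappear and are not consumed
        produced |= products - reactants
    return consumed <= produced


# Toy network (an assumption for illustration): a catalyses b -> c, and c decays to b.
REACTIONS: List[Reaction] = [
    (frozenset({"a", "b"}), frozenset({"a", "c"})),
    (frozenset({"c"}), frozenset({"b"})),
]

if __name__ == "__main__":
    for candidate in (frozenset({"a", "b", "c"}), frozenset({"a", "b"}), frozenset({"b", "c"})):
        print(sorted(candidate),
              "closed:", is_closed(REACTIONS, candidate),
              "semi-self-maintaining:", is_semi_self_maintaining(REACTIONS, candidate))
```

In this toy case only the full set {a, b, c} passes both checks, which is the qualitative sense in which it would count as an organization.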

Patrick McMillen & Michael Levin Communications Biology volume 7, Article number: 378 (2024) A defining feature of biology is the use of a multiscale architecture, ranging from molecular networks to cells, tissues, organs, whole bodies, and swarms. Crucially however, biology is not only nested structurally, but also functionally: each level is able to solve problems in distinct problem spaces, such as physiological, morphological, and behavioral state space. Percolating adaptive functionality from one level of competent subunits to a higher functional level of organization requires collective dynamics: multiple components must work together to achieve specific outcomes. Here we overview a number of biological examples at different scales which highlight the ability of cellular material to make decisions that implement cooperation toward specific homeodynamic endpoints, and implement collective intelligence by solving problems at the cell, tissue, and whole-organism levels. We explore the hypothesis that collective intelligence is not only the province of groups of animals, and that an important symmetry exists between the behavioral science of swarms and the competencies of cells and other biological systems at different scales. We then briefly outline the implications of this approach, and the possible impact of tools from the field of diverse intelligence for regenerative medicine and synthetic bioengineering. Read the full article at: www.nature.com

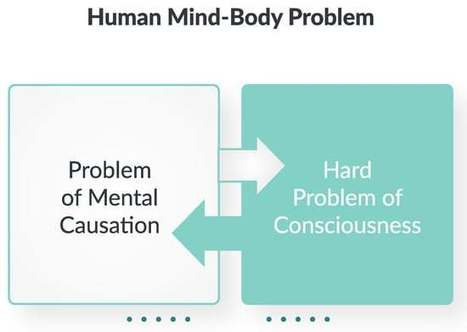

Tom Froese Entropy 2024, 26(4), 288 Cognitive science is confronted by several fundamental anomalies deriving from the mind–body problem. Most prominent is the problem of mental causation and the hard problem of consciousness, which can be generalized into the hard problem of agential efficacy and the hard problem of mental content. Here, it is proposed to accept these explanatory gaps at face value and to take them as positive indications of a complex relation: mind and matter are one, but they are not the same. They are related in an efficacious yet non-reducible, non-observable, and even non-intelligible manner. Natural science is well equipped to handle the effects of non-observables, and so the mind is treated as equivalent to a hidden ‘black box’ coupled to the body. Two concepts are introduced given that there are two directions of coupling influence: (1) irruption denotes the unobservable mind hiddenly making a difference to observable matter, and (2) absorption denotes observable matter hiddenly making a difference to the unobservable mind. The concepts of irruption and absorption are methodologically compatible with existing information-theoretic approaches to neuroscience, such as measuring cognitive activity and subjective qualia in terms of entropy and compression, respectively. By offering novel responses to otherwise intractable theoretical problems from first principles, and by doing so in a way that is closely connected with empirical advances, irruption theory is poised to set the agenda for the future of the mind sciences. Read the full article at: www.mdpi.com

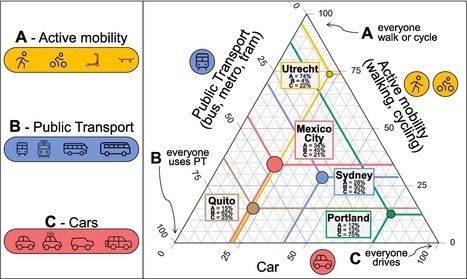

Rafael Prieto-Curiel, Juan P. Ospina Environment International Volume 185, March 2024, 108541 The use of cars in cities has many negative impacts, including pollution, noise and the use of space. Yet, detecting factors that reduce the use of cars is a serious challenge, particularly across different regions. Here, we model the use of various modes of transport in a city by aggregating Active mobility (A), Public Transport (B) and Cars (C), expressing the modal share of a city by its ABC triplet. Data for nearly 800 cities across 61 countries is used to model car use and its relationship with city size and income. Our findings suggest that with longer distances and the congestion experienced in large cities, Active mobility and journeys by Car are less frequent, but Public Transport is more prominent. Further, income is strongly related to the use of cars. Results show that a city with twice the income has 37% more journeys by Car. Yet, there are significant differences across regions. For cities in Asia, Public Transport contributes to a substantial share of their journeys. For cities in the US, Canada, Australia, and New Zealand, most of their mobility depends on Cars, regardless of city size. In Europe, there are vast heterogeneities in their modal share, from cities with mostly Active mobility (like Utrecht) to cities where Public Transport is crucial (like Paris or London) and cities where more than two out of three of their journeys are by Car (like Rome and Manchester). Read the full article at: www.sciencedirect.com
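
To make the "twice the income, 37% more journeys by Car" figure concrete, the short Python sketch below converts it into an elasticity. It assumes a power-law relationship (car journeys proportional to income raised to an exponent beta), which is an assumption made here for illustration and not necessarily the functional form used in the paper; the function names are hypothetical.

```python
import math


def elasticity_from_doubling(increase_per_doubling: float) -> float:
    """Exponent beta such that doubling income multiplies car journeys by
    (1 + increase_per_doubling), assuming journeys ~ income ** beta."""
    return math.log2(1.0 + increase_per_doubling)


def relative_car_journeys(income_ratio: float, beta: float) -> float:
    """Multiplicative change in car journeys for a given income ratio,
    under the same assumed power law."""
    return income_ratio ** beta


if __name__ == "__main__":
    beta = elasticity_from_doubling(0.37)
    print(f"implied elasticity: {beta:.3f}")
    print(f"4x income -> {relative_car_journeys(4.0, beta):.2f}x car journeys")
```

Under this assumption a 37% increase per doubling corresponds to an exponent of roughly 0.45, so car use grows with income but less than proportionally.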

Xiao Yang, Réka Albert, Lauren Molloy Elreda, & Nilam Ram Social influence processes can induce desired or undesired behavior change in individual members of a group. Empirical modeling of group processes and the design of network-based interventions meant to promote desired behavior change is somewhat limited because the models often assume that the social influence is assimilative only and that the networks are not fully connected. We introduce a Boolean network method that addresses these two limitations. In line with dynamical systems principles, temporal changes in group members’ behavior are modeled as a Boolean network that also allows for application of control theory design of group management strategies that might direct the groups towards desired behavior. To illustrate the utility of the method for psychology, we apply the Boolean network method to empirical data of individuals’ self-disclosure behavior in multi-week therapy groups (N = 135, 18 groups, T = 10 ∼ 16 weeks). Empirical results provide description of each group member’s pattern of self-disclosure and social influence and identification of group-specific network control strategies that would elicit self-disclosure from the majority of the group. Of the 18 group models, 16 included both assimilative and repulsive social influence. Useful control strategies were not needed for 10 already well-functioning groups, were identified for 6 groups, and were not available for 2 groups. The findings illustrate the utility of the Boolean network method for modeling the simultaneous existence of assimilative and repulsive social influence processes in small groups, and developing strategies that may direct groups toward desired states without manipulating social ties. Read the full article at: advances.in

Josh C. Bongard Communications of the ACM The automated design, construction, and deployment of autonomous and adaptive machines is an open problem. Industrial robots are an example of autonomous yet nonadaptive machines: they execute the same sequence of actions repeatedly. Conversely, unmanned drones are an example of adaptive yet non-autonomous machines: they exhibit the adaptive capabilities of their remote human operators. To date, the only force known to be capable of producing fully autonomous as well as adaptive machines is biological evolution. In the field of evolutionary robotics, one class of population-based metaheuristics (evolutionary algorithms) is used to optimize some or all aspects of an autonomous robot. The use of metaheuristics sets this subfield of robotics apart from the mainstream of robotics research, in which machine learning algorithms are used to optimize the control policy of a robot. As in other branches of computer science, the use of a metaheuristic algorithm has a cost and a benefit. The cost is that it is not possible to guarantee if (or when) an optimal control policy will be found for a given robot. The benefit is that few assumptions must be made about the problem: evolutionary algorithms can improve both the parameters and the architecture of the robot’s control policy, and even the shape of the robot itself. Read the full article at: cacm.acm.org

Jordi Piñero, Ricard Solé, and Artemy Kolchinsky

Phys. Rev. Research 6, 013275 Harvesting free energy from the environment is essential for the operation of many biological and artificial systems. We use techniques from stochastic thermodynamics to investigate the maximum rate of harvesting achievable by optimizing a set of reactions in a Markovian system, possibly under various kinds of topological, kinetic, and thermodynamic constraints. This question is relevant for the optimal design of new harvesting devices as well as for quantifying the efficiency of existing systems. We first demonstrate that the maximum harvesting rate can be expressed as a constrained convex optimization problem. We illustrate it on bacteriorhodopsin, a light-driven proton pump from Archaea, which we find is close to optimal under realistic conditions. In our second result, we solve the optimization problem in closed-form in three physically meaningful limiting regimes. These closed-form solutions are illustrated on two idealized models of unicyclic harvesting systems. Read the full article at: link.aps.org

Wagner, N. Systems 2024, 12(3), 96 Modeling and simulation of complex systems frequently requires capturing probabilistic dynamics across multiple scales and/or multiple domains. Cyber–physical, cyber–social, socio–technical, and cyber–physical–social systems are common examples. Modeling and simulating such systems via a single, all-encompassing model is often infeasible, and thus composable modeling techniques are sought. Co-simulation and closure modeling are two prevalent composable modeling techniques that divide a multi-scale/multi-domain system into sub-systems, use smaller component models to capture each sub-system, and coordinate data transfer between component models. While the two techniques have similar goals, differences in their methods lead to differences in the complexity and computational efficiency of a simulation model built using one technique or the other. This paper presents a probabilistic analysis of the complexity and computational efficiency of these two composable modeling techniques for multi-scale/multi-domain complex system modeling and simulation applications. The aim is twofold: to promote awareness of these two composable modeling approaches and to facilitate complex system model design by identifying circumstances that are amenable to either approach. Read the full article at: www.mdpi.com

Matteo Chinazzi, Jessica T. Davis, Ana Pastore y Piontti, Kunpeng Mu, Nicolò Gozzi, Marco Ajelli, Nicola Perra, Alessandro Vespignani Epidemics The Scenario Modeling Hub (SMH) initiative provides projections of potential epidemic scenarios in the United States (US) by using a multi-model approach. Our contribution to the SMH is generated by a multiscale model that combines the global epidemic metapopulation modeling approach (GLEAM) with a local epidemic and mobility model of the US (LEAM-US), first introduced here. The LEAM-US model consists of 3142 subpopulations each representing a single county across the 50 US states and the District of Columbia, enabling us to project state and national trajectories of COVID-19 cases, hospitalizations, and deaths under different epidemic scenarios. The model is age-structured, and multi-strain. It integrates data on vaccine administration, human mobility, and non-pharmaceutical interventions. The model contributed to all 17 rounds of the SMH, and allows for the mechanistic characterization of the spatio-temporal heterogeneities observed during the COVID-19 pandemic. Here we describe the mathematical and computational structure underpinning our model, and present as a case study the results concerning the emergence of the SARS-CoV-2 Alpha variant (lineage designation B.1.1.7). Our findings reveal considerable spatial and temporal heterogeneity in the introduction and diffusion of the Alpha variant, both at the level of individual states and combined statistical areas, as it competes against the ancestral lineage. We discuss the key factors driving the time required for the Alpha variant to rise to dominance within a population, and quantify the significant impact that the emergence of the Alpha variant had on the effective reproduction number at the state level. Overall, we show that our multiscale modeling approach is able to capture the complexity and heterogeneity of the COVID-19 pandemic response in the US. 
Read the full article at: www.sciencedirect.com

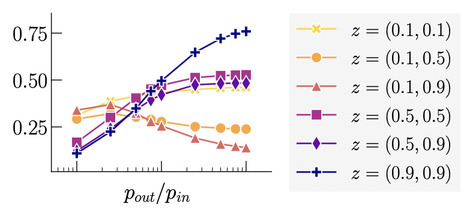

Antoine Vendeville, Shi Zhou, and Benjamin Guedj

Phys. Rev. E 109, 024312 Online social networks have become primary means of communication. As they often exhibit undesirable effects such as hostility, polarization, or echo chambers, it is crucial to develop analytical tools that help us better understand them. In this paper we are interested in the evolution of discord in social networks. Formally, we introduce a method to calculate the probability of discord between any two agents in the multistate voter model with and without zealots. Our work applies to any directed, weighted graph with any finite number of possible opinions, allows for various update rates across agents, and does not imply any approximation. Under certain topological conditions, the opinions are independent and the joint distribution can be decoupled. Otherwise, the evolution of discord probabilities is described by a linear system of ordinary differential equations. We prove the existence of a unique equilibrium solution, which can be computed via an iterative algorithm. The classical definition of active links density is generalized to take into account long-range, weighted interactions. We illustrate our findings on real-life and synthetic networks. In particular, we investigate the impact of clustering on discord and uncover a rich landscape of varied behaviors in polarized networks. This sheds light on the evolution of discord between, and within, antagonistic communities.

Heather Z. Brooks, Mason A. Porter We study the spreading dynamics of content on networks. To do this, we use a model in which content spreads through a bounded-confidence mechanism. In a bounded-confidence model (BCM) of opinion dynamics, the agents of a network have continuous-valued opinions, which they adjust when they interact with agents whose opinions are sufficiently close to theirs. The employed content-spread model introduces a twist into BCMs by using bounded confidence for the content spread itself. To study the spread of content, we define an analogue of the basic reproduction number from disease dynamics that we call an opinion reproduction number. A critical value of the opinion reproduction number indicates whether or not there is an “infodemic” (i.e., a large content-spreading cascade) of content that reflects a particular opinion. By determining this critical value, one can determine whether or not an opinion will die off or propagate widely as a cascade in a population of agents. Using configuration-model networks, we quantify the size and shape of content dissemination using a variety of summary statistics, and we illustrate how network structure and spreading model parameters affect these statistics. We find that content spreads most widely when the agents have large expected mean degree or large receptiveness to content. When the amount of content spread only slightly exceeds the critical opinion reproduction number (i.e., the infodemic threshold), there can be longer dissemination trees than when the expected mean degree or receptiveness is larger, even though the total number of content shares is smaller. Read the full article at: arxiv.org
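
For readers unfamiliar with bounded-confidence updates, the general idea can be sketched with one pairwise step of the Deffuant–Weisbuch model, a standard BCM. This is not the content-spread model of the paper: the function name, the `confidence` threshold, and the compromise parameter `mu` are assumptions chosen for illustration.

```python
from typing import Tuple


def deffuant_step(x_i: float, x_j: float, confidence: float, mu: float = 0.5) -> Tuple[float, float]:
    """One pairwise bounded-confidence update (Deffuant-Weisbuch style).

    Two agents move toward each other only if their opinions differ by less
    than `confidence`; otherwise both opinions are left unchanged.
    `mu` in (0, 0.5] controls how far each agent moves toward the other.
    """
    if abs(x_i - x_j) < confidence:
        return x_i + mu * (x_j - x_i), x_j + mu * (x_i - x_j)
    return x_i, x_j


if __name__ == "__main__":
    # Close opinions compromise; distant opinions ignore each other.
    print(deffuant_step(0.2, 0.4, confidence=0.3))
    print(deffuant_step(0.1, 0.9, confidence=0.3))
```

With `mu = 0.5` a receptive pair meets at its midpoint, while a pair separated by more than the threshold does not interact at all, which is the mechanism that lets distinct opinion clusters persist.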
